Robovision 5.11: The Next Leap in Industrial AI


The end of another quarter brings the release of Robovision v5.11, an update that offers more freedom, flexibility, and oversight than ever before.

This release expands intelligent robotics capabilities with major advancements in multi-camera support, better data management, and faster labeling.

Multi-Camera Mastery for Complex Vision (Alpha)

For applications that demand high-resolution and intricate 3D vision, Robovision v5.11 lays the foundation for seamless multi-camera integration.

- Synchronized Multi-Camera Support: Previously, multi-camera setups often relied on external, custom solutions. With 5.11 you can trigger multiple cameras at once; for instance, you could use 10 cameras to capture images simultaneously. Native platform support makes it easier to cover larger work areas, enable future 3D applications, and integrate and manage demanding vision systems. Each set of captured multi-view samples is processed as a single unit: the individual images are analyzed and the results are combined into a unified output for comprehensive analysis and object segmentation. Note that the more cameras you connect, the more compute you'll need.

- Recording and Simulation: Now, you can record multi-view samples directly within the platform. This new feature allows you to simulate recordings at different frame rates, simplifying the testing and optimization of robot workflows using real-world data.

- Data Ingestion and Organization: The system can record from multiple cameras at once. The images from one capture are grouped into a single unit, called a "sample" or "box" (a collection of images). Metadata can be added to the box.

- Structured Labeling: The platform supports a tree-like structure for labeling, so a human labeler knows which image is being labeled in the box. The structure allows for tracking what has been labeled on each individual image.

- Inference Processing: The algorithm receives the entire box of images. It then runs inference on each image sequentially and combines the outputs into a single result for the entire box.

- Pluggable: Capabilities are now in place for users to develop their own multi-camera algorithms.
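
The inference step described above (one box in, one combined result out) can be sketched as follows. This is a minimal illustration rather than the Robovision API: `Box`, `Detection`, `run_box_inference`, and the stand-in `dummy_model` are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Detection:
    camera_id: str   # which view produced this detection
    label: str
    score: float


@dataclass
class Box:
    """A multi-view sample: one image per camera plus free-form metadata (hypothetical type)."""
    images: Dict[str, bytes]                          # camera_id -> raw image data
    metadata: Dict[str, str] = field(default_factory=dict)


def run_box_inference(
    box: Box, model: Callable[[bytes], List[Tuple[str, float]]]
) -> List[Detection]:
    """Run the model on each image in turn and merge the outputs into one result."""
    combined: List[Detection] = []
    for camera_id, image in box.images.items():       # sequential, one view at a time
        for label, score in model(image):
            combined.append(Detection(camera_id, label, score))
    return combined


def dummy_model(image: bytes) -> List[Tuple[str, float]]:
    # Stand-in for a real model: reports one "object" for every non-empty image.
    return [("object", 0.9)] if image else []


box = Box(
    images={"cam_0": b"\x01", "cam_1": b"", "cam_2": b"\x02"},
    metadata={"lot_number": "A-17"},
)
result = run_box_inference(box, dummy_model)
print([d.camera_id for d in result])  # ['cam_0', 'cam_2']
```

Keeping the camera identifier on every detection is one way to preserve traceability back to the individual view after the outputs have been merged.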

Shape the Robovision AI Platform to Fit Your Operations – Like a Glove

Delivering new levels of customization and efficiency.

- Algo SDK: The Power of Customization: For the data scientists among you, we’ve given our Algo SDK a full architectural overhaul, an effort that started with the previous release, v5.10. As a result, the SDK is cleaner, more robust, and significantly easier to use. While you won't see brand-new features, there is a significant difference in performance and reliability.

Under the hood, the platform has been upgraded to Pydantic 2, bringing a tremendous boost in speed and clearer error messaging, making it smoother (and faster) to integrate proprietary algorithms into the Robovision workflow.

But no worries, existing SDK algorithms are still fully supported, so you can transition at your own pace.

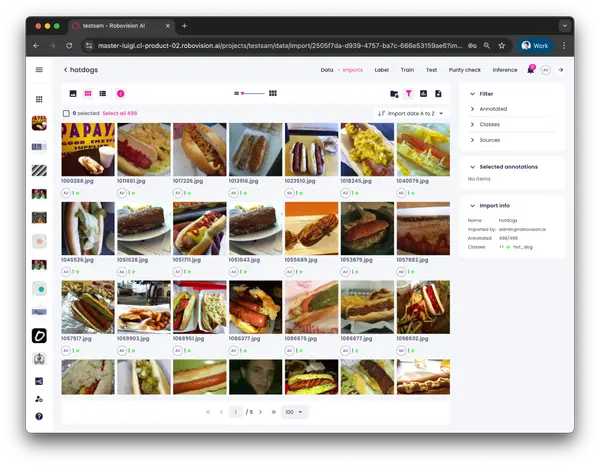

Metadata Magic: What Your Metadata Shows You

Our dedicated Data Center, which was released with v5.10, now supports custom metadata filters. It’s no longer just about seeing your metadata; it can actually help improve your models.

You can now filter your datasets with custom variables such as machine_ID, temperature, capture_date, operator_name, or lot-number.

Say you want to train a model using only data coming from Machine 2, captured by your best technician during a specific date range. Now you can, and as a result you’ll be able to curate the exact data you need for training. This lets you find the perfect samples to improve your models, ensuring you're always working with the most valuable data.
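
To make the Machine 2 scenario concrete, here is a minimal sketch of that kind of filter in plain Python. It does not use the Data Center's actual interface: the `Sample` type, the `filter_samples` helper, and the metadata values are hypothetical and only mirror the variable names mentioned above.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List


@dataclass
class Sample:
    sample_id: str
    metadata: Dict[str, object]   # custom metadata attached to the sample


def filter_samples(
    samples: List[Sample],
    machine_id: str,
    operator_name: str,
    start: date,
    end: date,
) -> List[Sample]:
    """Keep samples from one machine and one operator captured within [start, end]."""
    selected = []
    for s in samples:
        captured = s.metadata.get("capture_date")
        if (
            s.metadata.get("machine_ID") == machine_id
            and s.metadata.get("operator_name") == operator_name
            and isinstance(captured, date)
            and start <= captured <= end
        ):
            selected.append(s)
    return selected


samples = [
    Sample("s1", {"machine_ID": "machine_2", "operator_name": "alice", "capture_date": date(2025, 3, 10)}),
    Sample("s2", {"machine_ID": "machine_1", "operator_name": "alice", "capture_date": date(2025, 3, 11)}),
    Sample("s3", {"machine_ID": "machine_2", "operator_name": "bob", "capture_date": date(2025, 3, 12)}),
    Sample("s4", {"machine_ID": "machine_2", "operator_name": "alice", "capture_date": date(2025, 5, 1)}),
]

training_set = filter_samples(samples, "machine_2", "alice", date(2025, 3, 1), date(2025, 3, 31))
print([s.sample_id for s in training_set])  # ['s1']
```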

Faster labeling for Bounding Boxes

Building on powerful SAM segmentation, we’re also introducing support for drawing bounding boxes using a Segment Anything Model (SAM), designed to dramatically accelerate your data labeling and model training. This integration generates highly accurate, automatic bounding boxes with a single click for object detection purposes. Simply upload your images, and let the advanced capabilities of a foundation-model labeling tool do the heavy lifting for you, identifying objects and drawing precise boxes in seconds. By leveraging SAM's state-of-the-art visual understanding, you reduce manual effort, ensure data consistency, and free up your team to focus on what matters most.

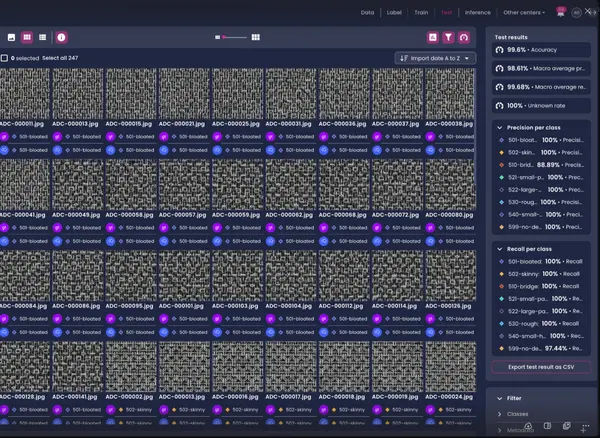
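
The release notes do not describe how the box is derived from the model output. One common approach, shown here purely as an assumption, is to take the tightest axis-aligned box around a predicted segmentation mask. The `mask_to_bbox` helper and the toy mask are hypothetical.

```python
from typing import List, Optional, Tuple


def mask_to_bbox(mask: List[List[int]]) -> Optional[Tuple[int, int, int, int]]:
    """Return the tight box (x_min, y_min, x_max, y_max) around non-zero pixels.

    Returns None when the mask is empty or contains no foreground pixels.
    """
    if not mask or not mask[0]:
        return None
    # Rows and columns that contain at least one foreground pixel.
    rows = [y for y, row in enumerate(mask) if any(row)]
    cols = [x for x in range(len(mask[0])) if any(row[x] for row in mask)]
    if not rows:
        return None
    return (min(cols), min(rows), max(cols), max(rows))


# Toy 4x5 binary mask standing in for a segmentation result.
mask = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
print(mask_to_bbox(mask))  # (1, 1, 3, 2)
```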

Expanding our Automatic Defect Classification with Multiclass Precision & Recall

An accuracy metric provides a single, simple number that anyone, whether an engineer or a manager, can understand at a glance. It is ideal for a rapid comparison of two models on an unfamiliar problem ahead of full analysis, but it can be fundamentally misleading. Accuracy quantifies the overall proportion of correctly classified instances, yet it hides critical failure modes because it is blind to the importance of each prediction. It treats the successful classification of a common part (easy) the same as successfully finding a rare defect (hard), and so it can mask critical failures that put your quality standards at risk.

With v5.11 we’re adding Precision and Recall to our Test Center. These two metrics are the industrial gold standard because they show how and why your model makes mistakes, giving you better control.

Precision (Per Class), also known as Positive Predictive Value (PPV), quantifies how trustworthy the model's positive predictions are: true positives relative to all predicted positives, TP / (TP + FP). In operational terms, high precision reduces the Total Cost of Ownership (TCO) related to scrap and unnecessary rework, because fewer compliant items are rejected (false positive reduction).

Recall (Per Class), also known as True Positive Rate (TPR) or Sensitivity, quantifies the system's capacity for complete defect identification: true positives relative to all actual positives, TP / (TP + FN). In operational terms, high recall lowers the Defect Escape Rate: fewer missed defects (false negatives) propagate downstream, which safeguards brand equity and mitigates liability risk.

By looking at Precision and Recall for every single defect type, you immediately see where your system is strong or weak. For example, you can compare how often your model correctly catches a "Crack Defect" (Recall) against how reliably it avoids false alarms when looking for a "Misaligned Label" (Precision). This allows you to find the system's weak spots and then fine-tune its settings (adjust the thresholds) so that your automated line always meets your specific quality goals and runs as efficiently as possible.
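
The gap between accuracy and the per-class metrics is easy to see on a small example. The sketch below computes precision and recall per class from lists of true and predicted labels, using the standard definitions. It is not the Test Center implementation, and the function name and toy data are made up for illustration.

```python
from collections import Counter
from typing import Dict, List, Optional


def per_class_precision_recall(
    y_true: List[str], y_pred: List[str]
) -> Dict[str, Dict[str, Optional[float]]]:
    """Compute precision = TP / (TP + FP) and recall = TP / (TP + FN) for each class."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1    # predicted this class, but it was something else
            fn[truth] += 1   # this class was present, but the model missed it
    metrics = {}
    for c in sorted(set(y_true) | set(y_pred)):
        predicted, actual = tp[c] + fp[c], tp[c] + fn[c]
        metrics[c] = {
            "precision": tp[c] / predicted if predicted else None,
            "recall": tp[c] / actual if actual else None,
        }
    return metrics


# Toy data: 8 good parts and 2 cracked parts; the model misses one crack.
y_true = ["ok"] * 8 + ["crack"] * 2
y_pred = ["ok"] * 8 + ["ok", "crack"]

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
metrics = per_class_precision_recall(y_true, y_pred)

print(accuracy)                    # 0.9
print(metrics["crack"]["recall"])  # 0.5
```

Here the model is 90% accurate overall, yet it finds only half of the cracks, which is exactly the kind of weakness a single accuracy number hides.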

Improving our "Unknown Loop"

Robovision's "Unknown Loop" delivers a dual advantage: empowering you to monitor data drift effectively while simultaneously performing Intelligent Data Capture.

Previously, however, samples failing to meet a preset class threshold were marked "unknown," which overwrote crucial original class information. While such samples were easy to filter in the Label Center for relabeling, this approach sacrificed valuable meta-information, hindering traceability and obscuring the model's initial prediction. As a result, labelers lacked the context to understand why an item was deemed "unknown" in the first place.

With v5.11, we've introduced a new dedicated meta-field within the annotation. The "unknown" tag has moved to this new meta-field, ensuring valuable inference data is no longer overwritten. Now, when your model makes a prediction (for instance, scoring an image as 97% dog, 90% cat, and 34% horse), all the rich, granular class information is preserved.

This particular enhancement provides labelers with deeper insights into the model's decision-making process, which ultimately improves relabeling. You retain critical model information, enabling a far more focused and effective approach to the continuous improvement of your AI models.
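
The difference between overwriting the class and flagging it in a meta-field can be sketched as below. The `Annotation` structure, the `annotate` helper, the `"unknown"` key, and the threshold value are assumptions made for this example and do not reflect the platform's actual annotation schema.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Annotation:
    predicted_class: str                 # the model's original top prediction, never overwritten
    class_scores: Dict[str, float]       # full per-class scores, kept for the labeler
    meta: Dict[str, object] = field(default_factory=dict)


def annotate(class_scores: Dict[str, float], threshold: float) -> Annotation:
    """Keep the original prediction and record the 'unknown' flag in a separate meta-field."""
    top_class = max(class_scores, key=class_scores.get)
    annotation = Annotation(predicted_class=top_class, class_scores=dict(class_scores))
    # Below-threshold samples are flagged instead of having their class replaced.
    annotation.meta["unknown"] = class_scores[top_class] < threshold
    return annotation


scores = {"dog": 0.97, "cat": 0.90, "horse": 0.34}
ann = annotate(scores, threshold=0.98)   # hypothetical, deliberately strict threshold

print(ann.predicted_class)   # dog
print(ann.meta["unknown"])   # True
print(ann.class_scores)      # {'dog': 0.97, 'cat': 0.9, 'horse': 0.34}
```

A labeler looking at this annotation can see both that the sample was routed for review and that the model was torn between "dog" and "cat".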

Stay Ahead with the Latest Robovision Release

Robovision stands for continuous innovation, bringing you the latest advancements in AI to strengthen operations. By consistently deploying the newest release of the Robovision platform, you gain immediate access to powerful new features such as a 375% (!!) speed increase in labeling with bounding boxes, custom data filtering using the labels that matter, and the flexibility to handle multiple cameras. You also ensure your systems remain at the forefront of the rapidly evolving AI landscape. Staying current means your models always benefit from our latest performance optimizations, security enhancements, and expanded capabilities, helping you maintain a competitive edge and realize even greater value from your Vision AI solutions.

Do you need help switching to v5.11? Get in touch.