3D Vision and AI Robotics: The Future of Intelligent Automation

Date Section Blog

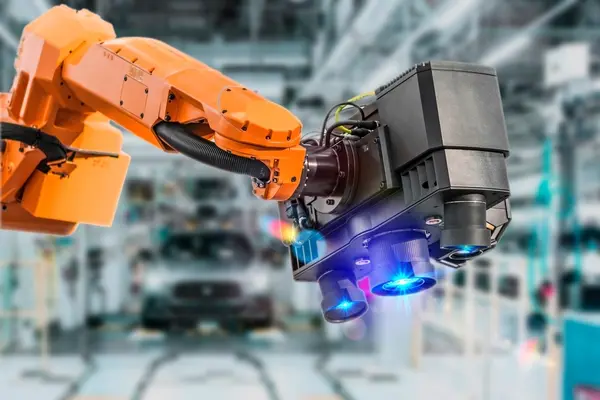

From factory floors to autonomous vehicles, 3D vision technology is transforming how machines perceive and interact with the world. Traditional vision systems have long played a role in automation, but new advancements—combined with artificial intelligence—are enabling robots to operate with unprecedented precision and adaptability.

By leveraging advanced cameras, depth sensors, and AI-powered processing, 3D vision AI systems understand depth, shape, and spatial positioning in real time. Various sectors already benefit from industrial 3D vision systems, from manufacturing and logistics to agriculture.

2D Vision vs. 3D Vision: Key Differences

Both 2D and 3D images are highly useful when training visual AI models. For decades, 2D vision systems have been widely used in industrial and agricultural automation. These 2D systems rely on flat, two-dimensional images to extract information, making them effective for tasks like barcode scanning, surface inspection, and basic object recognition. 2D imaging struggles with depth-related challenges, such as distinguishing overlapping leaves or assessing fruit ripeness based on shape.

As automation grows more complex, 3D vision systems are becoming essential. Unlike 2D systems, 3D technology captures depth, volume, and spatial information, allowing machines to interact more intelligently with real-world environments.

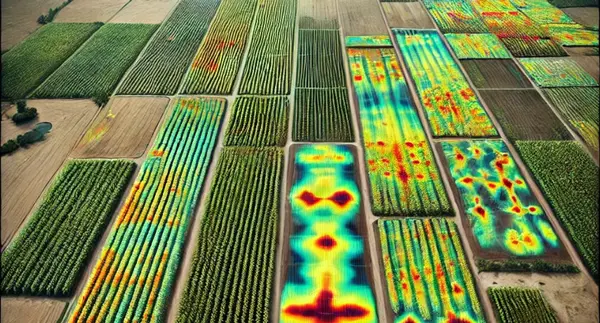

This is particularly useful in agriculture, where robots navigate uneven terrain, distinguish between healthy and diseased plants, and optimize harvesting by accurately detecting fruit location, health, and ripeness. In industrial settings, 3D vision improves robotic pick-and-place operations, quality inspection, and autonomous navigation.

2D Vision vs. 3D Vision: Quick Comparison

3D vision is not meant to replace 2D technologies—both are useful depending on the use case.

| 2D Vision | 3D Vision | |

|---|---|---|

| Image Type | Flat, grayscale, or color images | Depth-enhanced images with spatial data |

| Depth Perception | Not available | Captures height, width, and depth |

| Applications | Barcode scanning, OCR, surface inspection | Robotic guidance, object localization, volume measurement |

| AI Integration | AI assists with pattern recognition and classification | AI enables depth perception, object localization, and scene reconstruction |

| Limitations | Struggles with occlusions and overlapping objects | Requires advanced processing for real-time applications |

Understanding 3D Vision: Where Does AI Come In?

3D vision systems rely on depth sensors, cameras, and computational algorithms to analyze spatial information. Raw 3D data alone is not enough for intelligent automation. AI “teaches” machines to interpret and act on said 3D visual data.

Neural networks process point clouds, depth maps, and stereoscopic images to detect patterns, recognize objects, and estimate dimensions. Deep learning models enhance segmentation, feature extraction, and anomaly detection, making robotic action more accurate and adaptive.

Key Technologies in 3D Vision Systems

Different imaging techniques are used based on the automation task. Some provide highly detailed object recognition, while others excel in depth perception and spatial mapping for navigation.

- Stereo Vision – Uses two or more cameras at different angles to calculate depth by triangulating corresponding points in images, enabling 3D spatial reconstruction for robotics and automation.

- Structured Light Cameras – Project known light patterns (grids, stripes, dots) onto objects and measure distortions to generate precise 3D surface maps, commonly used for defect detection and object recognition.

- Time-of-Flight (ToF) Sensors – Emit infrared pulses and measure the time it takes for reflections to return, enabling real-time depth mapping for applications like robotic navigation and gesture recognition.

- Hyperspectral Imaging – Not inherently 3D imaging, but often paired with 3D systems. Captures hundreds of spectral bands beyond visible light, allowing AI to analyze material composition humans cannot see. Often used for applications in quality inspection, medical imaging, and security.

- Multispectral Imaging – Captures a limited number of spectral bands, providing less detailed spectral data than hyperspectral imaging but still useful for agricultural monitoring, remote sensing, and industrial quality control.

3D Vision and AI Robotics: Current and Future Applications

Industries with high variability, unstructured environments, and the need for detailed spatial awareness are driving the adoption of 3D vision and AI robotics. For example:

- Manufacturing: Industrial robots equipped with 3D vision systems perform precision assembly, defect detection, and automated quality control.

- Logistics: AI-powered 3D vision enables autonomous robots to navigate warehouses. For example, IKEA deploys drones across its warehouses to automate stock inventory processes and Proteus transports inventory and manages item sorting in Amazon warehouses.

- Agriculture: 3D vision technologies, including stereo vision and hyperspectral imaging, allow AI systems to assess crop health, automate harvesting, and improve resource efficiency.

While 3D vision and AI robotics are already transforming industries like manufacturing and logistics, several emerging markets are on the brink of widespread adoption. Some include:

- Healthcare: AI analysis of 3D imaging holds promise for robotic-assisted surgery and medical diagnostics. The industry faces challenges such as accuracy and regulatory approvals, which slow down widespread adoption.

- Autonomous Vehicles: Self-driving cars rely on 3D vision and AI for navigation and obstacle detection. While technological advancements continue, environmental unpredictability and sensor limitations still present ongoing challenges.

- Humanoid Robots: Several companies are developing humanoid robots meant to work in human-centric environments, such as warehouses, offices, and retail spaces. The robots are still in the development stages, but Tesla's Optimus robot is rumored to be released in 2025.

3D Vision and AI Robotics: Just the Beginning

3D vision and AI robotics are undoubtedly transforming automation by giving machines the ability to interpret depth, recognize complex objects, and navigate unpredictable environments. Industries like manufacturing, logistics, and agriculture are already integrating these technologies, improving productivity, reducing costs, and addressing labor shortages.

The potential of 3D vision and robotics in other industries is vast. And a time when humanoid robots employ space in everyday life seems to be just around the corner. As sensor technology, AI processing, and computing power continue to advance, 3D vision will become even more capable, redefining what machines can see, understand, and do.